Oktay from VPSDIME, also known as the CEO of Backupsy, one of the most popular backup VPS providers, has launched a new product line in Los Angeles. The new nodes are more powerful than before and run on pure SSD RAID10 arrays. Oktay is also offering a special deal to all VPS MATEs: with this special coupon code you get a FREE upgrade from 30G SSD to 50G SSD in RAID 10, and the $5 installation fee is waived.
CPU: X5660
6GB Memory
30G HDD => 30GB SSD => 50GB SSD in RAID 10
2TB Traffic Limit
1Gbps Uplink
1 IP Address
$5 Installation Charge
VPSMATE -> 50% recurring discount, free installation charge & 50G SSD FREE UPGRADE
BUY LINK
root@testip:~# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/simfs 50G 1.7G 49G 4% /
tmpfs 615M 24K 615M 1% /run
tmpfs 5.0M 0 5.0M 0% /run/lock
tmpfs 1.3G 0 1.3G 0% /run/shm
root@testip:~#
Memory Oversale Test
Allocated: 6544M Allocated: 6545M Allocated: 6546M Allocated: 6547M Allocated: 6548M Allocated: 6549M Allocated: 6550M Allocated: 6551M Allocated: 6552M Allocated: 6553M Allocated: 6554M Allocated: 6555M Allocated: 6556M Allocated: 6557M Allocated: 6558M Allocated: 6559M Allocated: 6560M Allocated: 6561M Allocated: 6562M Allocated: 6563M Allocated: 6564M Allocated: 6565M Allocated: 6566M Allocated: 6567M Allocated: 6568M Allocated: 6569M Allocated: 6570M
bash: line 3: 8463 Killed ./a.out
Simple I/O & Network Bench
Fetching System Informaion
root@testip
OS: Debian
Kernel: x86_64 Linux 2.6.32-042stab088.4
Uptime: 48m
Packages: 203
…

Q. How do I find all the large files in a directory?
A. No single command lists all large files, but with the help of the find command and shell pipes you can list them easily.
Usage: find [-H] [-L] [-P] [-Olevel] [-D help|tree|search|stat|rates|opt|exec] [path...] [expression]
default path is the current directory; default expression is -print
expression may consist of: operators, options, tests, and actions:
operators (decreasing precedence; -and is implicit where no others are given):
( EXPR ) ! EXPR -not EXPR EXPR1 -a EXPR2 EXPR1 -and EXPR2 EXPR1 -o EXPR2 EXPR1 -or EXPR2 EXPR1 , EXPR2
positional options (always true): -daystart -follow -regextype
normal options (always true, specified before other expressions):
-depth --help -maxdepth LEVELS -mindepth LEVELS -mount -noleaf --version -xdev -ignore_readdir_race -noignore_readdir_race
tests (N can be +N or -N or N):
-amin N -anewer FILE -atime N -cmin N -cnewer FILE -ctime N -empty -false -fstype TYPE -gid N -group NAME -ilname PATTERN -iname PATTERN -inum N -iwholename PATTERN -iregex PATTERN -links N -lname PATTERN -mmin N -mtime N -name PATTERN -newer FILE -nouser -nogroup -path PATTERN -perm [+-]MODE -regex PATTERN -readable -writable -executable -wholename PATTERN -size N[bcwkMG] -true -type [bcdpflsD] -uid N -used N -user NAME -xtype [bcdpfls]
actions:
-delete -print0 -printf FORMAT -fprintf FILE FORMAT -print -fprint0 FILE -fprint FILE -ls -fls FILE -prune -quit -exec COMMAND ; -exec COMMAND {} + -ok COMMAND ; -execdir COMMAND ; -execdir COMMAND {} + -okdir COMMAND ;
Report (and track progress on fixing) bugs via the findutils bug-reporting page at http://savannah.gnu.org/ or, if you…
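The find-plus-pipes approach mentioned in the answer can be sketched as a small shell function. This is only a sketch under assumptions: it relies on the -size and -printf options listed in the usage text (so GNU find), and the function name list_large_files and its default values are made up for illustration.

```shell
# list_large_files DIR MIN_SIZE [COUNT]
# Print "size-in-bytes<TAB>path" for regular files under DIR that are
# larger than MIN_SIZE (a find -size value such as 100M or 50k),
# biggest first, limited to COUNT lines (default 10).
# Assumes GNU find, because -printf is a GNU extension.
list_large_files() {
    local dir min_size count
    dir=${1:-.}
    min_size=${2:-100M}
    count=${3:-10}
    # find emits size and path, sort orders numerically (largest first),
    # head keeps only the top COUNT entries.
    find "$dir" -type f -size +"$min_size" -printf '%s\t%p\n' 2>/dev/null |
        sort -rn |
        head -n "$count"
}
```

For example, list_large_files /var 100M 20 would print up to twenty files above 100M under /var. Note that find rounds sizes up to whole units, so +1M means "more than one 1M block", not "more than 1048576 bytes".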

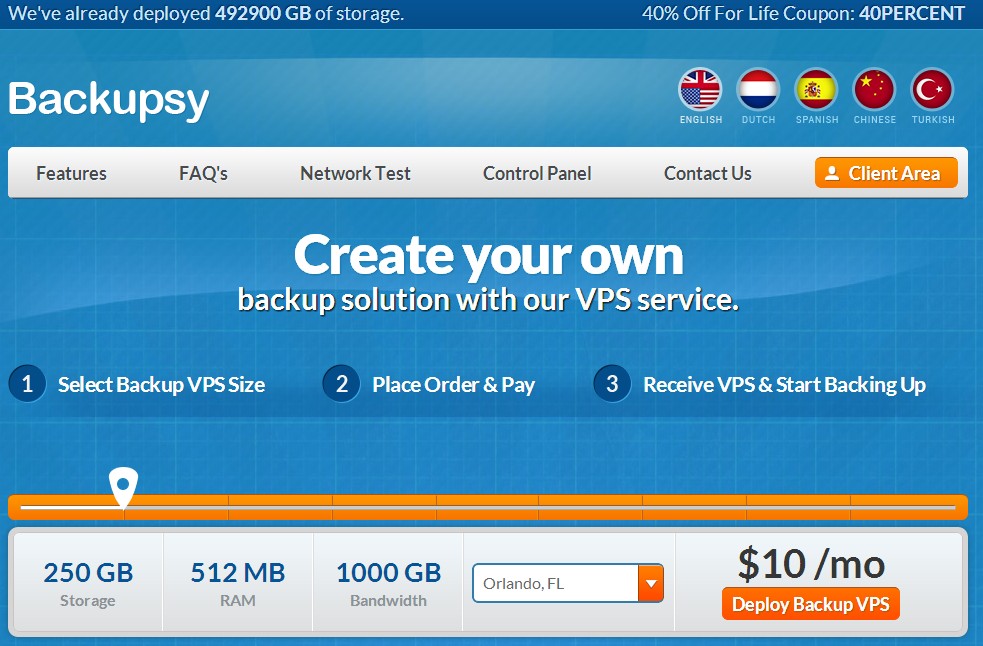

Backupsy is considered one of the cheapest storage VPS providers in the web hosting market these days. I have had a 500G storage VPS with them for more than 8 months. It has been running smoothly with decent uptime, and the support is fast and helpful. I would like to share this hidden offer with you. At the moment the biggest coupon you can get is 50% (code: happybirthday), which brings a 500G VPS down to $10/mo. However, I managed to get the following link for you, which gets you a 500G VPS for only $7/mo. Enjoy.
Storage VPS - KVM500LEB
1 vCPU Core
512MB RAM
500GB RAID50 Diskspace
2000GB Bandwidth
1 IPv4 Address
KVM / Custom Panel
$7.00/Month | Buy Link
Chicago: http://204.145.72.4/1000MB.test
Dallas: http://69.12.95.3/1000MB.test
Orlando, FL: http://198.49.79.4/1000MB.test
Denver: http://162.213.216.131/1000MB.test
Los Angeles: http://162.217.135.4/1000MB.test
New York: http://204.145.81.4/1000MB.test
Netherlands: http://192.71.151.4/1000MB.test
UK: http://185.38.46.4/1000MB.test
US locations are allowed to run software / OS that assists backing up, owncloud, private VPN / proxy, development, monitoring and other light CPU and IO intensive applications, except Torrents, TOR and Game Servers.
NL & UK locations are allowed to run anything except Digital Currency Mining, Torrents, TOR, Game Servers, Public Proxies and anything illegal.

I have to say Prometeus has become one of my favorite VPS service providers due to its excellent customer support and amazing server performance. They are one of the best providers you can find these days, and they follow industry ethics. I have their iwstack service and three of their Dallas VPSes across my two accounts. The following is a Simple I/O and Network bench run 6 months after purchase on the 1.5G Xen / Raid10:
Fetching System Informaion
root@XP-DA-03
OS: Unknown 7.3 wheezy
Kernel: x86_64 Linux 3.2.0-4-amd64
Uptime: 58d 12h 48m
Packages: 576
Shell: bash
CPU: Intel Xeon CPU E5-2620 0 @ 2GHz
RAM: 748MB / 1499MB
Starting I/O Tests
bs=64k count=4k
4096+0 records in
4096+0 records out
268435456 bytes (268 MB) copied, 0.812683 s, 330 MB/s
64k count=16k
16384+0 records in
16384+0 records out
1073741824 bytes (1.1 GB) copied, 3.52568 s, 305 MB/s
512k count=4k
4096+0 records in
4096+0 records out
2147483648 bytes (2.1 GB) copied, 11.0406 s, 195 MB/s
bs=1M count=1k
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB) copied, 3.21694 s, 334 MB/s
bs=64k count=16k
16384+0 records in
16384+0 records out
1073741824 bytes (1.1 GB) copied, 2.86814 s, 374 MB/s
real 0m3.309s user 0m0.032s sys 0m2.836s
CPU model : Intel(R) Xeon(R) CPU E5-2620 0 @ 2.00GHz
Number of cores : 4
CPU frequency : 1999.999 MHz
Total amount of ram : 1499 MB…
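The bs= and count= pairs in the I/O test output are dd parameters. A minimal sketch of one such sequential write test follows, assuming GNU dd. The function name io_test, the /dev/zero source and the conv=fdatasync flag are assumptions for illustration and not necessarily what the benchmark script behind this output ran.

```shell
# io_test TARGET [BS] [COUNT]
# Write COUNT blocks of BS bytes of zeros to TARGET and print dd's
# summary line (bytes copied, elapsed time, throughput).
# conv=fdatasync makes dd flush the data to disk before reporting,
# so the page cache does not inflate the figure.
io_test() {
    local target bs count
    target=$1
    bs=${2:-64k}
    count=${3:-4k}
    # dd prints its statistics on stderr, so redirect it into the pipe
    # and keep only the final summary line.
    dd if=/dev/zero of="$target" bs="$bs" count="$count" conv=fdatasync 2>&1 |
        tail -n 1
}
```

Running io_test ./ddtest 64k 16k would reproduce the 1.1 GB case; remove the test file afterwards.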

Is wget slow for you? Have you tried increasing the TCP buffer and are still not getting the download speeds you expect? Do you need resume and multi-connection functionality? The solution is Axel. On Windows there are many download managers that offer maximum speed and resume support, but on Linux only a few are CLI-based, as most have a GUI.
Axel Installation:
Here we are going to install Axel and then explain how to use it.
- Debian Based Distro: If you’re on a Debian-based distribution like Ubuntu, you can install Axel easily with apt-get.
apt-get install axel
- Redhat Based Distro: Axel is not included in the default Yum repositories unless you have EPEL / Remi enabled, so you need to build it from source or use an RPM, which is faster and easier.
rpm -ivh http://pkgs.repoforge.org/axel/axel-2.4-1.el6.rf.x86_64.rpm
Command-Line Switches:
You can get the complete list of switches with “man axel” or “axel --help”, but here are a few that are useful for general usage.
--max-speed=x -s x Specify maximum speed (bytes per second)
--num-connections=x -n x Specify maximum number of connections
--output=f -o f Specify local output file
--header=x -H x Add header string
--user-agent=x -U x Set user agent
--no-proxy -N Just don't use any proxy server
--quiet -q Leave stdout alone
--verbose -v More status information
Examples:
- Download with the maximum number of connections set to 10
axel -n 10 http://cachefly.cachefly.net/200mb.test
- Downloads at…
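The -n and -o switches from the list above can be wrapped in a small helper so the connection count and output file are easy to vary. This is a sketch, not part of Axel itself: the function name fetch_fast and the DRY_RUN variable (which only prints the command instead of running it) are hypothetical.

```shell
# fetch_fast URL [CONNECTIONS] [OUTPUT]
# Build an axel command line from the documented -n and -o switches.
# With DRY_RUN set to a non-empty value, the command is printed, not run.
fetch_fast() {
    local url conns out
    url=$1
    conns=${2:-10}
    out=${3:-}
    # Reuse the positional parameters to assemble the command safely.
    set -- axel -n "$conns"
    if [ -n "$out" ]; then
        set -- "$@" -o "$out"
    fi
    set -- "$@" "$url"
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
        return 0
    fi
    if ! command -v axel >/dev/null 2>&1; then
        echo "axel is not installed" >&2
        return 1
    fi
    "$@"
}
```

For instance, DRY_RUN=1 fetch_fast http://cachefly.cachefly.net/200mb.test 10 shows the command that would be executed without downloading anything.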

Over the last year, and very feverishly over the past five months, we’ve been working on a really big project: a revamp of the Linode plans and our hardware and network – something we have a long history of doing over our past 11 years. But this time it’s like no other. These upgrades represent a $45MM investment, a huge amount of R&D, and some exciting changes. SSDs Linodes are now SSD. This is not a hybrid solution – it’s fully native SSD servers using battery-backed hardware RAID. No spinning rust! And, no consumer SSDs either – we’re using only reliable, insanely fast, datacenter-grade SSDs that won’t slow down over time. These suckers are not cheap. 40 Gbps Network Each and every Linode host server is now connected via 40 Gbps of redundant connectivity into our core network, which itself now has an aggregate bandwidth of 160 Gbps. Linodes themselves can receive up to 40 Gbps of inbound bandwidth, and our plans now go up to 10 Gbps outbound bandwidth. Processors Linodes will now receive Intel’s latest high-end Ivy Bridge E5-2680.v2 full-power server-grade processors. New Plans We’ve doubled the RAM on all Linode plans! We’ve also aligned compute and outbound bandwidth with the cost of each plan. In other words, the number of vCPUs you get increases as you go through the plans. And on the networking side, Linodes are now on a 40 Gbit link, with outbound bandwidth that also increases through the plans. Inbound traffic is still free and restricted only by link speed (40 Gbps). Plan RAM…

Not long ago Crissic re-launched KVM, which runs on the same type of hardware as their OpenVZ nodes and is hosted at GoRack in Jacksonville, Florida. Here are three KVM packages you will probably find interesting. OpenVZ deals are further down, this time with doubled disk space.
KVM1024 (Limited Stock)
1GB RAM
3 vCPU cores
50GB Disk
3TB Bandwidth
KVM/SolusVM
IPv4: 2
IPv6: 16
1Gbit port
$7/Month
Order here
KVM768
768MB RAM
2 vCPU cores
40GB Disk
2.5TB Bandwidth
KVM/SolusVM
IPv4: 2
IPv6: 16
1Gbit port
$6/Month
Order here
KVM512
512MB RAM
2 vCPU cores
25GB Disk
2TB Bandwidth
KVM/SolusVM
IPv4: 2
IPv6: 16
1Gbit port
$4/month
Order here
Crissic has been featured three times before, once during the Christmas specials and twice before that. Although still in their first year of business, Crissic has accomplished more than many other providers: they have their own network (AS62639) and own all their hardware. Later in April, Crissic will celebrate their anniversary by launching their own 10G network directly with Atrato. Although the hardware is in Jacksonville, Florida, Crissic is based in Springfield, Missouri. Here are the OpenVZ offers (with doubled disk space); the TOS/AUP information and network tests are listed below them.
OpenVZ 512MB
512MB RAM
512MB vSwap
3 vCPU cores
100GB Disk
2TB Bandwidth
OpenVZ/SolusVM
IPv4: 2
IPv6: Up to 200
1Gbit port
$15/yr
Order here
OpenVZ 1024MB
1024MB RAM
1024MB vSwap
4 vCPU cores
150GB Disk
3TB Bandwidth
OpenVZ/SolusVM
IPv4: 2
IPv6: Up to 200
1Gbit port
$4/Month
Order here
OpenVZ 2048MB
2048MB RAM
2048MB vSwap
4 vCPU cores
200GB Disk
4TB Bandwidth
OpenVZ/SolusVM
IPv4: 2
IPv6: Up…

Fliphost – $13.50/year 128MB OpenVZ VPS in Dallas and Buffalo, $18/year 50mbit OpenVZ VPS in Dallas, and more

Alex, from Fliphost, is back with a nice load of offers! While they usually come up with KVM offers, this time it’s OpenVZ’s turn! There are four normal SSD-based OpenVZ VPS offers and three “normal storage” OpenVZ VPS offers with unlimited bandwidth. The storage offers are only available in Dallas, while the other offers are also available in Buffalo. Fliphost were founded in June 2011 and have since been purchased by Query Foundry. They run as an independent brand in the Query Foundry “family of brands”, with Query Foundry having recently acquired LiquidHost, and GetDedi a bit before that. Fliphost have featured several times before and their offers are usually quite popular. They also had a popular dedicated server offer a while back. Alex informs us that the servers they run are Dual E5-16XX’s with 128GB RAM and 8x Intel 520 SSDs in hardware RAID10 with the LSI 9266/9271 card. Feel free to share your experiences with Fliphost in the comments.
Storage50
256MB RAM
256MB vSwap
50GB disk space
Unlimited bandwidth
50Mbps uplink
1x IPv4 address
OpenVZ/SolusVM
$18/year
Order in Dallas
SSD128
128MB RAM
128MB vSwap
5GB SSD disk space
500GB bandwidth
1Gbps uplink
1x IPv4 address
OpenVZ/SolusVM
$13.50/year
Order in Dallas
Order in Buffalo
SSD2
1024MB RAM
512MB vSwap
20GB SSD disk space
1TB bandwidth
1Gbps uplink
1x IPv4 address
OpenVZ/SolusVM
$6.50/month
Order in Dallas
Order in Buffalo
Note: All prices are prorated, so the checkout price may appear less than the recurring amount. More offers inside!
Storage100
256MB RAM
256MB vSwap
100GB disk space
Unlimited bandwidth
100Mbps uplink
1x IPv4 address
OpenVZ/SolusVM
$2.62/month
Order in…

Feel free to use my Linode Ref Link
Fetching System Informaion
root@linode
OS: Unknown 7.4 wheezy
Kernel: x86_64 Linux 3.13.7-x86_64-linode38
Uptime: 6m
Packages: 289
Shell: bash
CPU: Intel Xeon CPU E5-2670 0 @ 2.6GHz
RAM: 96MB / 988MB
Starting I/O Tests
bs=64k count=4k
4096+0 records in
4096+0 records out
268435456 bytes (268 MB) copied, 1.5064 s, 178 MB/s
64k count=16k
16384+0 records in
16384+0 records out
1073741824 bytes (1.1 GB) copied, 10.7773 s, 99.6 MB/s
512k count=4k
4096+0 records in
4096+0 records out
2147483648 bytes (2.1 GB) copied, 26.4852 s, 81.1 MB/s
bs=1M count=1k
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB) copied, 11.1901 s, 96.0 MB/s
bs=64k count=16k
16384+0 records in
16384+0 records out
1073741824 bytes (1.1 GB) copied, 8.81315 s, 122 MB/s
real 0m10.494s user 0m0.000s sys 0m1.750s
CPU model : Intel(R) Xeon(R) CPU E5-2670 0 @ 2.60GHz
Number of cores : 8
CPU frequency : 2600.074 MHz
Total amount of ram : 988 MB
Total amount of swap : 0 MB
System uptime : 7 min,
Download speed from CacheFly: 44.6MB/s
Download speed from Coloat, Atlanta GA: 4.21MB/s
Download speed from Softlayer, Dallas, TX: 5.90MB/s
Download speed from Linode, Tokyo, JP: 55.8MB/s
Download speed from i3d.net, NL:
Download speed from Leaseweb, Haarlem, NL:
Download speed from Softlayer, Singapore: 5.89MB/s
Download speed from Softlayer, Seattle, WA: 7.83MB/s
Download speed from Softlayer, San…

How do I use the tar command over a secure ssh session?
The GNU version of the tar archiving utility (and other, older versions of tar) can be used across the network over an ssh session. Do not use the telnet command; it is insecure. You can use Unix/Linux pipes to create archives. The following command backs up the /backup directory to the host Remote_IP over an ssh session:
tar zcvf - /backup | ssh root@Remote_IP "cat > /backup/backup.tar.gz"
Output:
tar: Removing leading `/' from member names
/backup/
/backup/n/nixcraft.in/
/backup/c/cyberciti.biz/
.... .. ...
Password:
You can also use the dd command for clarity:
tar cvzf - /backup | ssh root@Remote_IP "dd of=/backup/backup.tar.gz"
It is also possible to dump the backup to a remote tape device:
tar cvzf - /backup | ssh root@Remote_IP "cat > /dev/nst0"
OR you can use mt to rewind the tape and then dump to it using the cat command. The remote commands must be quoted so that they run on the remote host rather than being expanded by the local shell:
tar cvzf - /backup | ssh root@Remote_IP "mt -f /dev/nst0 rewind; cat > /dev/nst0"
You can restore a tar backup over an ssh session:
cd /
ssh root@Remote_IP "cat /backup/backup.tar.gz" | tar zxvf -
If you wish to use the above commands in a cron job or script, consider SSH keys to get rid of the password prompts.
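For use in scripts, the tar-through-ssh pipe can be wrapped in a function. The sketch below rests on a few assumptions: the name backup_over_ssh is made up, the REMOTE_SHELL variable is a hypothetical hook that lets you swap ssh for another transport when trying it out, and it archives with -C plus a relative name (instead of an absolute path) so tar does not need to strip the leading slash.

```shell
# backup_over_ssh SRC_DIR HOST DEST_FILE
# Stream a gzip-compressed tar of SRC_DIR to DEST_FILE on HOST.
# REMOTE_SHELL (hypothetical, defaults to ssh) is the command used to
# reach the remote side; DEST_FILE must not contain single quotes.
backup_over_ssh() {
    local src host dest
    src=$1
    host=$2
    dest=$3
    # -C changes into the parent directory first, so member names are
    # stored relative to it (e.g. backup/file rather than /backup/file).
    tar -C "$(dirname "$src")" -czf - "$(basename "$src")" |
        ${REMOTE_SHELL:-ssh} "$host" "cat > '$dest'"
}
```

A call such as backup_over_ssh /backup root@Remote_IP /backup/backup.tar.gz corresponds to the first command in the article. With SSH keys in place it runs without a password prompt.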

NeedaServer Dual Xeon Quad Core Los Angeles 24GB RAM L5520 $49.95 L5639 Dual Six Core $69.95

Los Angeles Data Center Optimized For Asia Traffic
Test Ping: 66.117.7.150
Silver Server (Promo Code "Yellow Wind" for $69.95)
Dual Intel Xeon L5639 Six-Core 2.13 GHz
1 TB SATA Drive
24 GB RAM
Free IPMI
100 Mbps Unmetered
$69.95 BUY HERE
Bronze Server (Promo Code "LoudWind" for $49.95)
Dual Quad-Core Intel Xeon L5520 2.26 GHz
1 TB SATA Drive
24 GB RAM
Free IPMI
100 Mbps Unmetered
$49.95 BUY HERE

This tutorial will help you mount your Google Drive on your Linux machine. This only maps the drive and does not sync it, which saves local disk space. A KVM VPS is required; an OpenVZ-virtualized VPS will not work. Try DigitalOcean's KVM VPS. Debian 7 64 bit is required.
Update and upgrade the OS so it is up to date:
apt-get update
apt-get dist-upgrade
root@GoogleDrive:~# uname -a
Linux GoogleDrive 3.2.0-4-amd64 #1 SMP Debian 3.2.54-2 x86_64 GNU/Linux
root@GoogleDrive:~# cat /etc/issue
Debian GNU/Linux 7 \n \l
root@GoogleDrive:~#
Install the following packages:
apt-get install ocaml
apt-get install ocaml-findlib
apt-get install sqlite3
apt-get install camlidl
apt-get install m4 libcurl4-gnutls-dev libfuse-dev libsqlite3-dev
apt-get install camlp4-extra
apt-get install fuse
apt-get install build-essential sudo ssh make git
Install opam:
git clone https://github.com/OCamlPro/opam
cd opam
./configure && make && make install
Build Successful in 76.86s. 840 jobs (parallelism 1.8x), 1124 files generated.
make[1]: Leaving directory `/root/opam'
mkdir -p /usr/local/bin
make opam-install opam-admin-install opam-installer-install
make[1]: Entering directory `/root/opam'
install _obuild/opam/opam.asm
install _obuild/opam-admin/opam-admin.asm
install _obuild/opam-installer/opam-installer.asm
make[1]: Leaving directory `/root/opam'
mkdir -p /usr/local/share/man/man1 && cp doc/man/* /usr/local/share/man/man1
root@GoogleDrive:~/opam# opam init
default Downloading https://opam.ocaml.org/urls.txt
default Downloading https://opam.ocaml.org/index.tar.gz
Updating ~/.opam/repo/compiler-index ...
Updating ~/.opam/compilers/ ...
Updating ~/.opam/repo/package-index ...
Updating ~/.opam/packages/ ...
Creating a cache of metadata in ~/.opam/state.cache ...
...
Do you want OPAM to modify ~/.bashrc and ~/.ocamlinit? (default is 'no', use 'f' to name a file other than ~/.bashrc) [N/y/f] {type enter}
...
=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=
1. To configure OPAM in the current shell session, you need to run: eval `opam config env`
2. To correctly configure OPAM for subsequent use, add the following line to your…

Google Drive is a cloud storage service provided by Google, which allows file sync, file sharing and collaborative editing. As of this writing, Google offers Google Drive client software on multiple platforms: Windows, Mac OS X, Android and iOS. Notably, however, the official Linux client for Google Drive is still missing. So if you want to access Google Drive on Linux, you need to either access Google Drive on the web, or use existing unofficial Linux client software. One such unofficial Linux client for Google Drive is Grive, an open-source command-line client for Google Drive. Grive allows on-demand bidirectional synchronization between your Google Drive account and a local directory. That is, upon start, Grive uploads any content changes made in the local directory to Google Drive, and downloads content updates from Google Drive to the local directory. In this tutorial, I will describe how to sync Google Drive from the command line by using Grive.
To install Grive on Ubuntu:
$ sudo add-apt-repository ppa:nilarimogard/webupd8
$ sudo apt-get update
$ sudo apt-get install grive
If you want to build Grive from source code, you can do the following.
$ sudo apt-get install cmake build-essential libgcrypt11-dev libjson0-dev libcurl4-openssl-dev libexpat1-dev libboost-filesystem-dev libboost-program-options-dev binutils-dev
$ wget http://www.lbreda.com/grive/_media/packages/0.2.0/grive-0.2.0.tar.gz
$ tar xvfvz grive-0.2.0.tar.gz
$ cd grive-0.2.0
$ cmake .
$ make
$ sudo make install
Now that Grive is installed, you can go ahead and launch it. When using Grive for the first time, create a local directory for Google Drive first, and run Grive with the "-a" option as follows.
$ mkdir ~/google_drive
$ cd ~/google_drive
$ grive -a
The above command will print out the Google authentication URL, and prompt you to enter…

How To Set Up And Use DigitalOcean Private Networking
Introduction
DigitalOcean now offers shared private networking in NYC2. All new droplets created in NYC2 have the option of using private networking; it can be activated by choosing the checkbox called "Private Networking" in the settings section of the droplet create page. If you already have a server in NYC2 set up without private networking, you can refer to this tutorial, which covers how to enable private networking on existing droplets. Droplets that have private networking enabled are then able to communicate with other droplets that have that interface as well. The shared private networking on DigitalOcean droplets is represented by a second interface on each server that has no internet access. This article will cover finding a droplet's private network address, transferring a file via the private network, and updating the /etc/hosts file.
Step One — Create Droplets with Private Networking
At this point, in order to take advantage of private networking, you do need to create new servers in NYC2. In this tutorial, we will refer to two droplets: pnv1 (111.222.333.444) and pnv2 (123.456.78.90). Go ahead and create both, enabling Private Networking on the droplet create page.
Step Two — Find your Private Network Address
Once both servers have been spun up, go ahead and log into one of them:
pnv2: ssh root@123.456.78.90
Once you are logged into the server, you can see the private address with ifconfig. The output of the command is displayed below:
ifconfig
eth0 Link encap:Ethernet HWaddr 04:01:06:a7:6f:01
inet addr:123.456.78.90 Bcast:123.456.78.255 Mask:255.255.255.0
inet6 addr:…
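Rather than reading the addresses off the ifconfig output by eye, they can be pulled out with sed. This is a sketch under an assumption: it matches the "inet addr:" format shown in the output above, and the function name inet_addrs is made up. Newer ifconfig versions print the address in a different format, so the pattern would need adjusting there.

```shell
# inet_addrs: read ifconfig-style output on stdin and print the value of
# every "inet addr:" field, one per line. Lines starting with
# "inet6 addr:" do not match the pattern and are skipped.
inet_addrs() {
    sed -n 's/.*inet addr:\([^ ]*\).*/\1/p'
}
```

Piping the command through it, as in ifconfig | inet_addrs, lists one IPv4 address per configured interface, which should include the private interface's address on a droplet with private networking enabled.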